Research Activities

We study mathematical models and algorithms intending to computationally reproduce human’s natural language communication. Here are examples of our major research topics currently in progress.

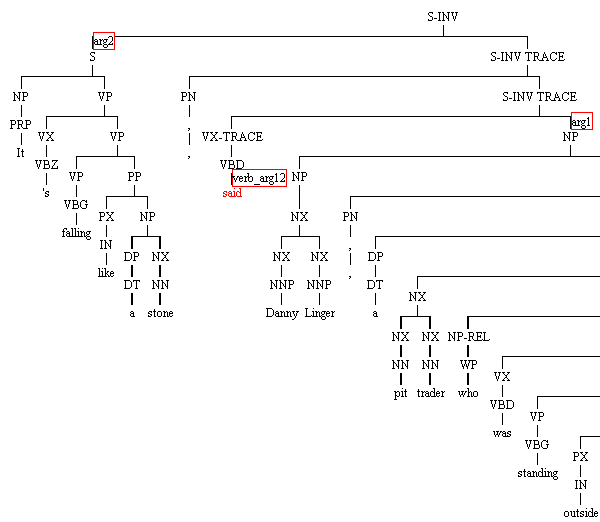

Syntactic Parsing

We study theories and technologies that make computers parse natural-language sentences and understand the meanings of sentences. Our research includes improvement of accuracy and speed of parsing as well as grammar development/acquisition. Enju parser that can output semantic structures of sentences in English, Japanese and Chinese is a good example of our achievement. We studied not only English or Japanese, but also Chinese, Vietnamese, and Amharic. We participate in an international project “Universal Dependencies” to develop multilingual resources and are engaged in data development in Japanese.

Semantic Analysis

In natural language, one meaning can be expressed by different linguistic expressions, at the same time one expression can have several different meanings. For example, “downpour” and “heavy rain” represent similar weather. Why do we know it? Humans usually can judge words mean “the same” or “different” without much thinking about the meaning of words, but it is not so easy to make computers do the same. In what situation do humans judge that two different linguistic expressions mean the same? We try through our research to uncover those mechanisms.

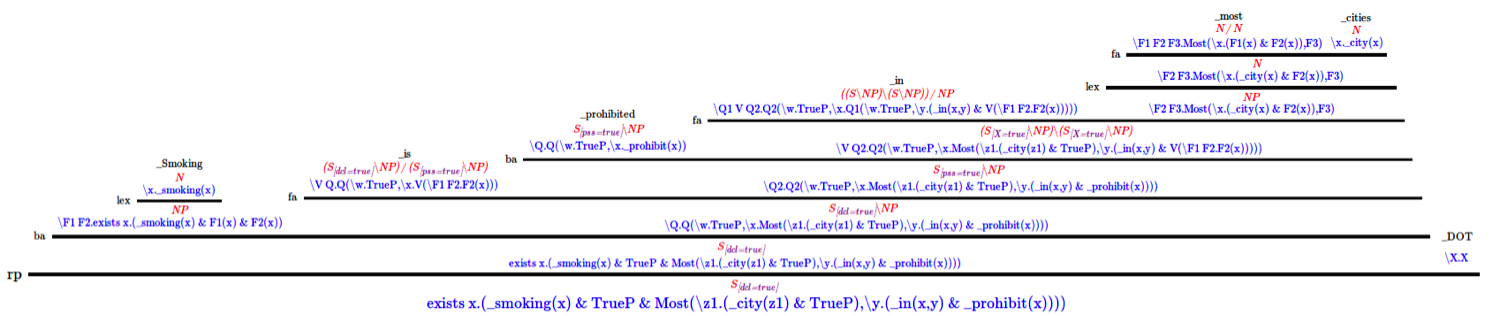

Specifically, we study recognition of inference in texts as known as RTE (recognizing textual entailment); a technology which automatically recognizes whether the meaning of two different sentences are equivalent or not. One of our research worth noting is a study on recognition of inference models which combines inferences based on formal logic with machine learning method for large-scale data.

We also study semantic parsing which converts natural language text into formal representations such as predicate logic formula or database query. If we can convert natural language text into predicate logic formula, we can solve recognition of inference in texts as inferences among logic formulas. If a sentence of inquiry can be converted into database query, we will be able to inquire database in natural language, and this is one of the grounding (more about that later).

Grounding

In addition to our verbal communicate through natural language, we obtain various nonverbal information visually, tactilely, etc. We can understand very well by combining verbal and nonverbal information together. For example, looking at a picture of mountain scenery, following conversation may take place: “Where did you take this picture?” “Tomamu. I visited there last week.” “For skiing?” We make such conversation unconsciously, and we do not know how we integrate various kinds of information to understand well.

Grounding is a study to link a concept described in natural language with nonverbal information. Among various kinds of nonverbal information, we especially focus on images, database, and chronological numeric data. For example, we study how to generate descriptions of a picture or moving image in natural language, how to inquire large-scale database in natural language, how to obtain an overview from fluctuating stock price data, etc.

We pursue collaborative research on grounding at AI Research Center of AIST. We conduct interdisciplinary research on image processing, machine learning, economics, etc., in collaboration with researchers of various domains. In JST PRESTO project “Development of text analysis technologies that can be linked to non-text data,” we studied on a basic technology to link images or database to natural language analysis.

Semantic Understanding in Dialogue Systems

To have a natural language dialogue with a robot is one of human dreams. The above-mentioned question answering is a part for its realization. However, we humans not only answer a question but also give additional information, make new information in combination with our knowledge, etc. Let us go back to the conversation example in our explanation on Grounding: “Where did you take this picture?” “Tomamu. I visited there last week.” “For skiing?” The speaker actively gives an additional information that he had been there in the previous week without being asked. And the other speaker infers from his knowledge that Tomamu is a famous destination for skiing. Even in this casual conversation we can find various natural language understanding and artificial intelligence technologies, and they are among our greatest hurdles. In particular, understanding human untterances can be considered as a kind of semantic parsing, and is an essential resarch topic in natural language processing.

We participate in the project “Communicative intelligent systems towards a human-machine symbiotic society”, studying fundamental technologies for applying semantic parsing to semantic understanding in dialog systems.

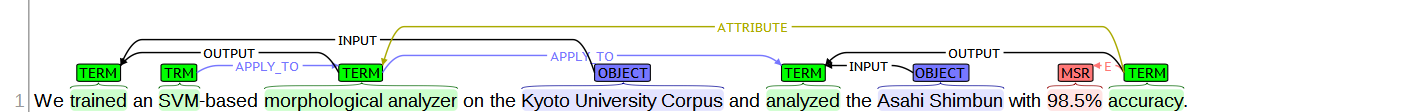

Knowledge Discovery from academic papers

Scientific research results are presented to public as an academic paper, and become a part of our common intellectual property. However, it is getting more and more difficult to find out necessary knowledge as the number of published papers is rapidly increasing. To find necessary knowledge through academic papers, searching by keyword is not enough; we need technologies to understand the relations among various concepts. We studied technologies to analyze the semantic relations of “methods”, “aims”, and “results”, etc. in academic papers. We have built dataset in English and Japanese, and conducted a research on semantic analysis model using this dataset.

Knowledge Discovery from financial datas

In NII Research Center for Financial Smart Data, we pursued research on the transformation of various financial data into “smart data,” and we studied natural language processing technologies to effectively utilize large-scale text data such as financial news texts.

Machine Translation

We applied the achievements of syntactic parsing and semantic analysis to accurate machine translation. Sentence structures and wordings are very different in English, Japanese and Chinese. We established a method to translate those languages at high accuracy by converting sentence structures in one language into those of the target language.

Question Answering

When we would like to know something, we search the Internet using a search engine and try to find an answer in the websites found. However, if there is a well-informed person around us, we would ask him directly. For example, if we ask “Do you know academic conference on natural language processing?” he would answer “In Japan there is the Association for Natural Language Processing, and overseas, Association for Computational Linguistics.” and this will save our time to read the various information on the Internet. We call the system which provide a response directly to a question like above example, as question answering system.

We have participated in NII Artificial Intelligence Project and built dataset on university entrance examinations. Questions for examinations especially in history are benchmarks that require question-answering technologies using various knowledge. We have also built NIILC-QA dataset with questions that can be answered with information on Wikipedia. We have collected not only questions and answers but also additional information such as keywords and queries to clarify the process to find an answer.